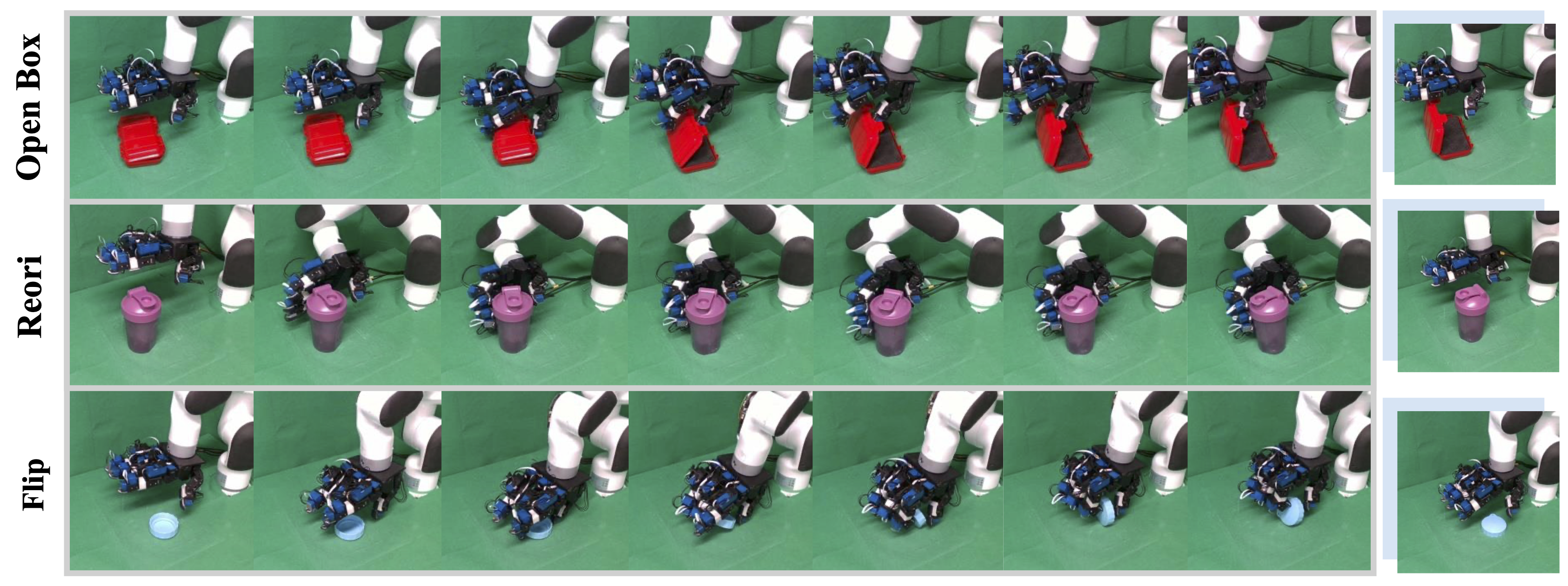

Tactile sensing plays a vital role in enabling robots to perform fine-grained, contact-rich tasks. However, the high dimensionality of tactile data, which stems from the large sensor coverage of dexterous hands, makes effective tactile feature learning difficult. This is especially true for 3D tactile data, for which there are no large standardized datasets and no strong pretrained backbones. To address these challenges, we propose a novel canonical representation that reduces the difficulty of 3D tactile feature learning, and we further introduce a force-based self-supervised pretraining task to capture both local and net force features, which are crucial for dexterous manipulation. Our method achieves an average success rate of 78% across four fine-grained, contact-rich dexterous manipulation tasks in real-world experiments, demonstrating its effectiveness and robustness compared to other methods. Further analysis shows that our method fully utilizes both spatial and force information from 3D tactile data to accomplish the tasks.
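
The summary above does not specify the canonical representation or the exact pretraining objective. As a rough illustration only, the sketch below assumes that each taxel is expressed as a point in a shared hand frame carrying a 3D force vector, and it computes two hypothetical pretraining targets: a per-taxel force magnitude (a local force feature) and the vector sum of all taxel forces (a net force feature). The names `Taxel`, `local_force_targets`, and `net_force_target` are illustrative and do not come from the paper.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Taxel:
    # Hypothetical canonical layout: taxel location in a shared hand frame
    position: Vec3
    # Hypothetical 3D contact force measured at this taxel
    force: Vec3


def local_force_targets(taxels: List[Taxel]) -> List[float]:
    """Per-taxel force magnitudes, one possible 'local force' target."""
    return [math.sqrt(sum(c * c for c in t.force)) for t in taxels]


def net_force_target(taxels: List[Taxel]) -> Vec3:
    """Vector sum of all taxel forces, one possible 'net force' target."""
    return (
        sum(t.force[0] for t in taxels),
        sum(t.force[1] for t in taxels),
        sum(t.force[2] for t in taxels),
    )


if __name__ == "__main__":
    hand = [
        Taxel(position=(0.01, 0.02, 0.00), force=(3.0, 4.0, 0.0)),
        Taxel(position=(0.03, 0.00, 0.01), force=(0.0, 0.0, -2.0)),
    ]
    print(local_force_targets(hand))  # [5.0, 2.0]
    print(net_force_target(hand))     # (3.0, 4.0, -2.0)
```

Summing force vectors is the simplest notion of net force; this sketch ignores torques and any masking or prediction scheme the actual pretraining task may use.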

Without Spatial Ablation: We observed that after the robot reached the object and attempted to grasp it, the thumb oscillated randomly, preventing further manipulation. This indicates that our policy leverages spatial information to form gross hand poses.

Without Force Ablation: Although the robot managed to reach and grasp the object, it consistently failed because the grasp was unstable or was continuously readjusted. This indicates that our policy leverages force information for fine-grained adjustments.
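
The ablation descriptions do not say how spatial or force information was removed from the input. Assuming, purely for illustration, that each taxel reading pairs a position with a force vector, one simple way to build such ablated inputs is to zero out one component while keeping the other. The helpers `without_spatial` and `without_force` below are hypothetical and are not the authors' implementation.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical taxel layout: (position in hand frame, 3D contact force)
Taxel = Tuple[Vec3, Vec3]

ZERO: Vec3 = (0.0, 0.0, 0.0)


def without_spatial(taxels: List[Taxel]) -> List[Taxel]:
    """Ablate spatial input: keep each force, replace every position with the origin."""
    return [(ZERO, force) for _, force in taxels]


def without_force(taxels: List[Taxel]) -> List[Taxel]:
    """Ablate force input: keep each position, replace every force with zero."""
    return [(position, ZERO) for position, _ in taxels]


if __name__ == "__main__":
    reading = [((0.01, 0.02, 0.0), (3.0, 4.0, 0.0))]
    print(without_spatial(reading))  # [((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))]
    print(without_force(reading))    # [((0.01, 0.02, 0.0), (0.0, 0.0, 0.0))]
```

Zeroing positions only removes spatial information if the downstream encoder treats taxels as an unordered set; with a fixed taxel ordering, the index alone could still leak each taxel's location.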

Our policy generalizes to most unseen objects with varying color, geometry, and dynamics.